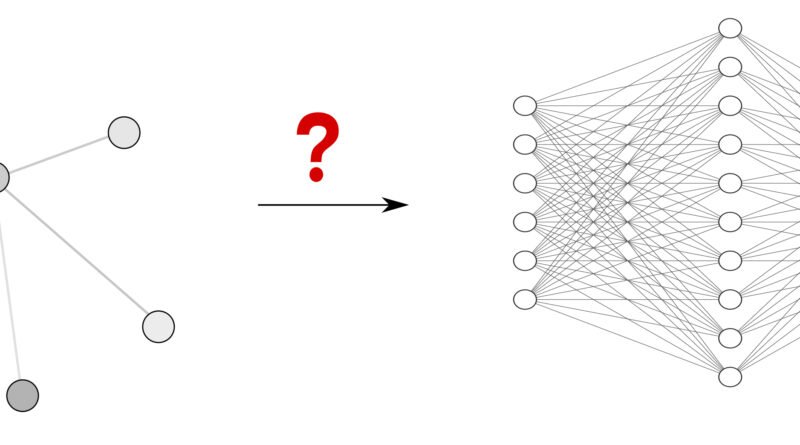

SYMBOLIC-MOE: A Mixture-of-Experts (MoE) Framework for Adaptive Instance-Level Mixing of Pre-Trained LLM Experts

Like humans, large language models (LLMs) often have differing skills and strengths derived from differences in their architectures and training
